Getting Containers for AI-LAB

Most applications on AI-LAB run inside containers: self-contained environments that include all the software and dependencies you need. AI-LAB uses Singularity to run containers.

What is a Container?

A container is like a pre-packaged software environment that includes:

- The application (Python, PyTorch, TensorFlow, etc.)

- All required libraries and dependencies

- System tools and configurations

- Everything needed to run your code

Think of it as a complete, portable computer environment that works the same way every time.

Three Ways to Get Containers

- Use pre-downloaded containers - Quickest option

- Download containers - For specific versions

- Build your own container - For custom environments

Which Method Should You Choose?

| Method | When to Use | Time Required | Difficulty |
|---|---|---|---|
| Pre-downloaded | Getting started, common frameworks | Instant | Easy |
| Download | Need specific version, latest updates | 10-20 minutes | Easy |
| Build | Custom requirements, specific packages | 30+ minutes | Advanced |

1. Pre-downloaded Containers

The easiest way to get started is using containers that are already available on AI-LAB. These are stored in /ceph/container and are regularly updated.

Available Containers

Check what's available:

ls /ceph/container

Common containers include:

- Python: Basic Python environment

- PyTorch: Deep learning with PyTorch

- TensorFlow: Deep learning with TensorFlow

- MATLAB: MATLAB computational environment

Using Pre-downloaded Containers

You can use these containers directly by referencing their full path:

# Example: Using PyTorch container

/ceph/container/pytorch/pytorch_24.09.sif

Finding the Right Container

To see what's in each container directory:

ls /ceph/container/pytorch/ # See available PyTorch versions

ls /ceph/container/tensorflow/ # See available TensorFlow versions
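To pick the newest image in one of these directories from a script, a small helper along the following lines may help. This is a minimal sketch: the function name `latest_sif` is hypothetical, it assumes a `sort` that supports `-V` (version sort, as in GNU coreutils), and it assumes the file names order meaningfully by version, as `pytorch_24.09.sif` suggests.

```shell
# Hypothetical helper (not an AI-LAB tool): print the .sif file in a
# directory whose name sorts highest by version number.
latest_sif() {
    local dir="$1"
    # Consider regular files and symlinks ending in .sif, sort them by
    # version, and keep only the last (highest) entry.
    find "$dir" -maxdepth 1 \( -type f -o -type l \) -name '*.sif' \
        | sort -V | tail -n 1
}

# Usage (the directory is the one listed above):
#   latest_sif /ceph/container/pytorch
```

If the directory contains no `.sif` files, the function prints nothing, so a caller can test for an empty result.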

2. Download Containers

If you need a specific version or container not available in the pre-downloaded collection, you can download containers from online repositories.

Popular Container Sources

- NVIDIA NGC Catalog: Optimized containers for AI/ML

- Docker Hub: Large collection of community containers

Step 1: Find Your Container

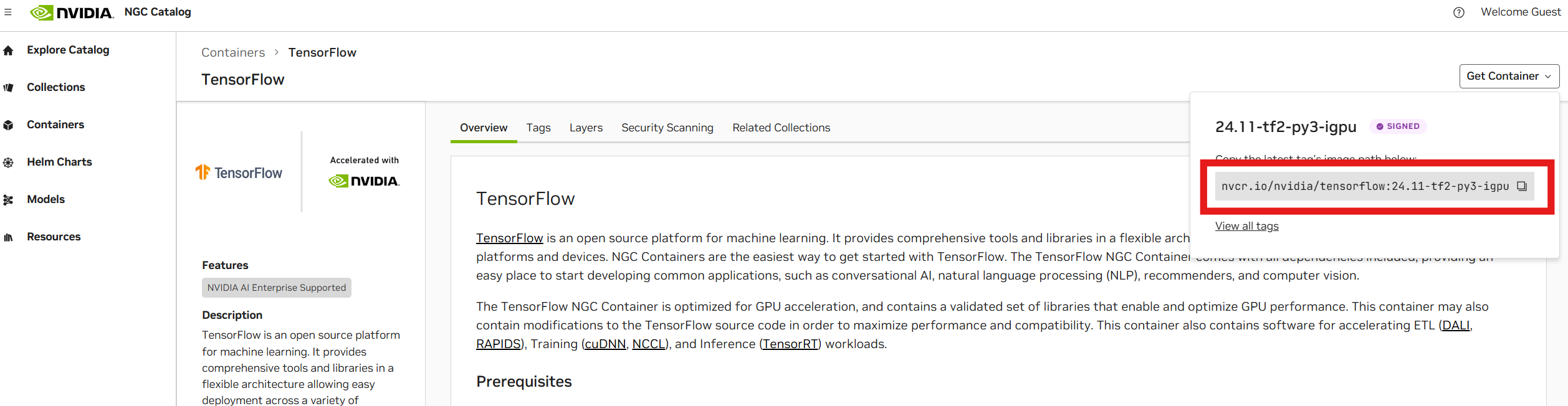

NVIDIA NGC Catalog:

- Visit NGC Catalog

- Search for your framework (e.g., "TensorFlow", "PyTorch")

- Click "Get Container" to get the URL

- Copy the URL (e.g., `nvcr.io/nvidia/tensorflow:24.11-tf2-py3`)

Docker Hub:

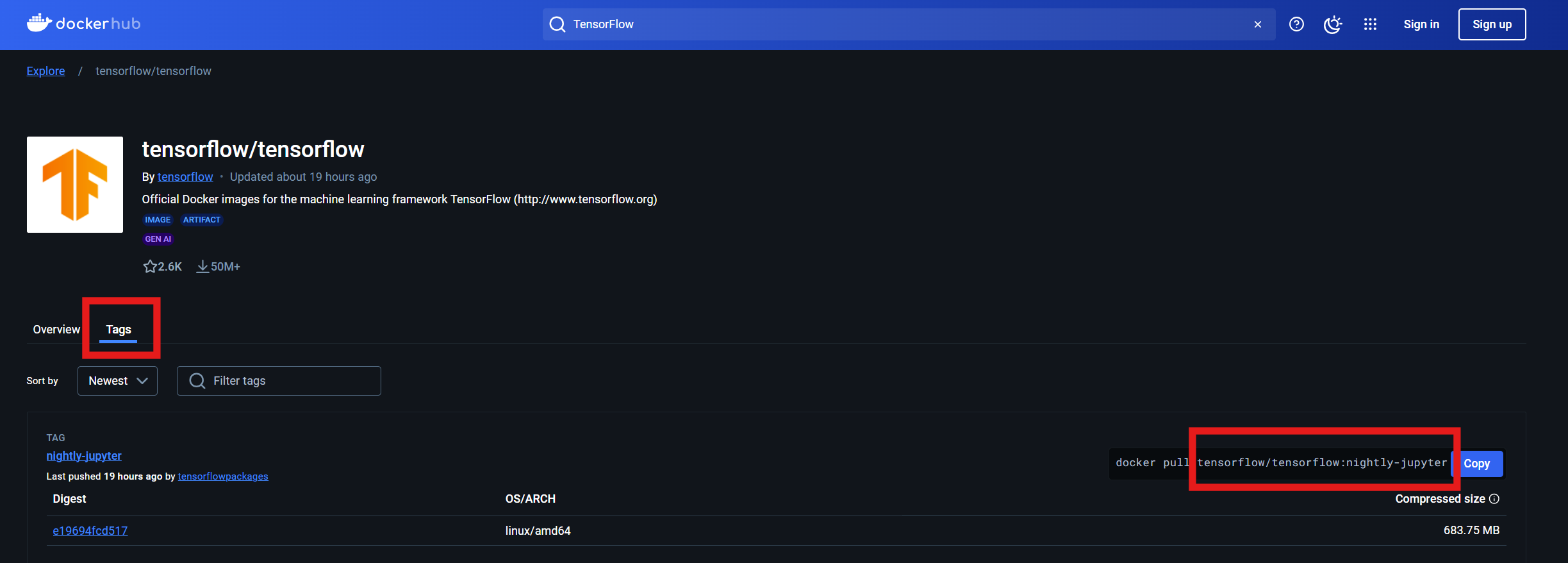

- Visit Docker Hub

- Search for your container

- Click on "Tags" to see available versions

- Copy the URL (e.g., `tensorflow/tensorflow:nightly-jupyter`)

Step 2: Set Up Singularity Environment

Before downloading, point Singularity's temporary and cache directories at locations in your home directory:

# Set up directories for Singularity

export SINGULARITY_TMPDIR="$HOME/.singularity/tmp/"

export SINGULARITY_CACHEDIR="$HOME/.singularity/cache/"

# Create the directories

mkdir -p "$SINGULARITY_CACHEDIR" "$SINGULARITY_TMPDIR"
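The same setup can be wrapped in a function so that it is easy to reuse and fails early when a directory cannot be created. This is only a sketch: the function name `setup_singularity_dirs` and its optional base-directory argument are illustrative, while the two variable names are the ones used above.

```shell
# Illustrative wrapper around the setup steps above.
# $1 (optional): base directory; defaults to $HOME/.singularity
setup_singularity_dirs() {
    local base="${1:-$HOME/.singularity}"
    export SINGULARITY_TMPDIR="$base/tmp/"
    export SINGULARITY_CACHEDIR="$base/cache/"
    # Create both directories; stop if that is not possible.
    mkdir -p "$SINGULARITY_TMPDIR" "$SINGULARITY_CACHEDIR" || return 1
    # Succeed only if both directories are writable.
    [ -w "$SINGULARITY_TMPDIR" ] && [ -w "$SINGULARITY_CACHEDIR" ]
}

# Usage: setup_singularity_dirs || echo "Singularity directories not usable" >&2
```

Because the variables are exported from within a function, the function has to be defined and called in the same shell session (or job script) that later runs `singularity`.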

Step 3: Download the Container

Use srun to download the container (this may take 10-20 minutes):

# Example: Download TensorFlow container

srun --mem 40G singularity pull docker://nvcr.io/nvidia/tensorflow:24.03-tf2-py3

Command explanation:

- `srun --mem 40G`: Run on compute node with 40GB memory

- `singularity pull`: Download and convert container

- `docker://`: Indicates this is a Docker container URL
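To make the three parts of the command explicit, the sketch below assembles it from an image reference and a memory request and prints it rather than running it. The function name `pull_command` is hypothetical; the printed command has the same form as the one shown above.

```shell
# Hypothetical helper: print (do not run) the pull command for an image.
# $1: image reference as copied from the registry page
# $2 (optional): memory request for srun; defaults to the 40G used above
pull_command() {
    local ref="$1" mem="${2:-40G}"
    # Drop a leading docker:// if the caller already included it,
    # so the prefix is never doubled.
    ref="${ref#docker://}"
    printf 'srun --mem %s singularity pull docker://%s\n' "$mem" "$ref"
}

pull_command nvcr.io/nvidia/tensorflow:24.03-tf2-py3
# prints: srun --mem 40G singularity pull docker://nvcr.io/nvidia/tensorflow:24.03-tf2-py3
```

Printing the command first gives you a chance to check the tag and memory request before submitting the download.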

Step 4: Use Your Downloaded Container

After download completes, you'll find a .sif file in your current directory:

ls *.sif # List downloaded containers
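The file name follows from the image reference: in the example above, `nvcr.io/nvidia/tensorflow:24.03-tf2-py3` becomes `tensorflow_24.03-tf2-py3.sif`. The sketch below reproduces that naming so that a script can check whether an image is already present before downloading it again. The function name `sif_name` is hypothetical, and the fallback to `latest` for references without a tag is an assumption about Singularity's default naming.

```shell
# Hypothetical helper: derive the expected .sif file name from an image
# reference, following the pattern <image>_<tag>.sif seen above.
sif_name() {
    local ref="${1#docker://}"   # tolerate an optional docker:// prefix
    local last="${ref##*/}"      # last path component, e.g. tensorflow:24.03-tf2-py3
    local name="${last%%:*}"     # image name without the tag
    local tag="latest"           # assumed default when no tag is given
    case "$last" in
        *:*) tag="${last#*:}" ;;
    esac
    printf '%s_%s.sif\n' "$name" "$tag"
}

sif_name nvcr.io/nvidia/tensorflow:24.03-tf2-py3
# prints: tensorflow_24.03-tf2-py3.sif
```

A script could then skip the download with a test such as `[ -f "$(sif_name "$ref")" ]`.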

Use it just like pre-downloaded containers:

# Example usage (--nv enables NVIDIA GPU support inside the container)

srun singularity exec --nv tensorflow_24.03-tf2-py3.sif python my_script.py

3. Build Your Own Container (Advanced)

For specialized requirements or custom environments, you can build your own containers using Singularity definition files.

Step 1: Create a Definition File

Create a Singularity definition file (.def) that describes your container:

nano my_container.def

Here's a simple example for a Python container:

Bootstrap: docker

From: ubuntu:20.04

%post

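# Avoid interactive prompts (e.g., from tzdata) that would stall the build

export DEBIAN_FRONTEND=noninteractive

# Update system

apt-get update

apt-get upgrade -y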

# Install Python and pip

apt-get install -y python3 python3-pip

# Upgrade pip (invoked through python3 so the matching interpreter is used)

python3 -m pip install --no-cache-dir --upgrade pip

# Install Python packages

python3 -m pip install --no-cache-dir numpy matplotlib torch

%test

# Test that Python works

python3 --version

python3 -c "import numpy, matplotlib, torch; print('All packages imported successfully')"

Definition file sections:

- `Bootstrap: docker`: Use a Docker image as the base

- `From: ubuntu:20.04`: The base image (here, Ubuntu 20.04)

- `%post`: Commands to run during the build

- `%test`: Commands that verify the container at the end of the build

Step 2: Set Up Environment

Configure Singularity for building:

# Set up directories

mkdir -p $HOME/.singularity/tmp

mkdir -p $HOME/.singularity/cache

export SINGULARITY_TMPDIR=$HOME/.singularity/tmp

export SINGULARITY_CACHEDIR=$HOME/.singularity/cache

export TMPDIR=$HOME/.singularity/tmp

export TEMP=$HOME/.singularity/tmp

export TMP=$HOME/.singularity/tmp

Step 3: Build the Container

Build your container using srun:

srun --mem=40G singularity build --fakeroot --tmpdir "$SINGULARITY_TMPDIR" my_container.sif my_container.def

The build may take 10-30 minutes or longer, depending on the complexity of the definition file.
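Because a build occupies a compute node for some time, it can be worth checking the definition file for obvious omissions before submitting it. The following is a minimal sketch, not a validator: the function name `check_def_file` is hypothetical, and it only confirms that the file exists and contains the `Bootstrap:` and `From:` headers used in the example definition file.

```shell
# Hypothetical pre-flight check for a definition file like the one above.
# Returns 0 if the file exists and has both header lines, 1 otherwise.
check_def_file() {
    local def="$1"
    [ -f "$def" ] || { echo "missing file: $def" >&2; return 1; }
    grep -q '^Bootstrap:' "$def" \
        || { echo "no Bootstrap: header in $def" >&2; return 1; }
    grep -q '^From:' "$def" \
        || { echo "no From: header in $def" >&2; return 1; }
}

# Usage: run the check first and build only if it passes.
#   check_def_file my_container.def && echo "definition file looks complete"
```

A passing check says nothing about whether the `%post` commands will succeed; it only catches a missing or truncated file early.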

Step 4: Test Your Container

Test that your container works:

# Test basic functionality

srun singularity exec my_container.sif python3 --version

# Test package imports

srun singularity exec my_container.sif python3 -c "import torch; print('PyTorch version:', torch.__version__)"

Step 5: Use Your Container

Use your custom container just like any other:

srun singularity exec --nv my_container.sif python3 my_script.py

Tips for Building Containers

- Start simple: Begin with basic containers and add complexity gradually

- Use `--no-cache-dir`: Prevents pip from storing package files

- Test thoroughly: Use the `%test` section to verify everything works

- Document your choices: Add comments explaining why you chose specific versions

Advanced Definition File Options

For more complex containers, you can use additional sections:

- `%environment`: Set environment variables

- `%runscript`: Define what happens when the container runs

- `%labels`: Add metadata to your container

- `%help`: Add help text
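As an illustration of how these sections fit together, the sketch below writes a small definition file from the shell. The file name `my_container_extended.def`, the label values, the environment variable, and the help text are all placeholders chosen for this example, and the `%post` section is deliberately minimal.

```shell
# Write an illustrative definition file; every value below is a placeholder.
# The quoted 'EOF' keeps "$@" literal so it ends up in the file unchanged.
cat > my_container_extended.def <<'EOF'
Bootstrap: docker
From: ubuntu:20.04

%labels
    Author your-name
    Version 0.1

%help
    Example container with Python 3.
    Arguments given to the container are passed on to python3.

%environment
    export LC_ALL=C

%post
    export DEBIAN_FRONTEND=noninteractive
    apt-get update
    apt-get install -y python3

%runscript
    exec python3 "$@"
EOF
```

With a `%runscript` like this one, running the built image directly starts `python3` with whatever arguments you supply.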

See the Singularity documentation for complete details.

You are now ready to learn how to use containers to run jobs.