AI Cloud

Introduction

AI Cloud is a GPU cluster made up of a collection of NVIDIA GPUs, designed for GPU-demanding machine learning workloads. The platform is accessed through a terminal application on the user's local machine. From there, the user logs in to a front-end node, where file management and job submission to the compute nodes take place.

Getting Started

How to access

Learn how to access AI Cloud

Guides for AI Cloud

Learn the basics of using AI Cloud

Terms and Conditions

Get an overview of the Terms and Conditions for AI Cloud

Key Features

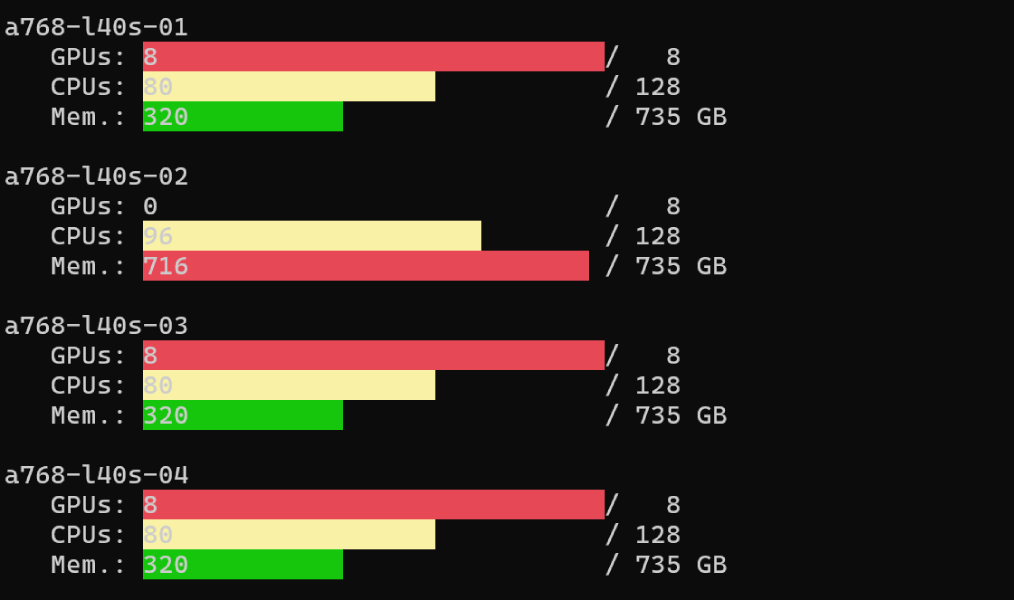

High-Performance GPU Cluster

Harness powerful NVIDIA GPUs for efficient processing of large datasets and complex models.

Containerization for Flexibility

Ensure consistent software environments across nodes, supporting diverse and customizable computational workflows.

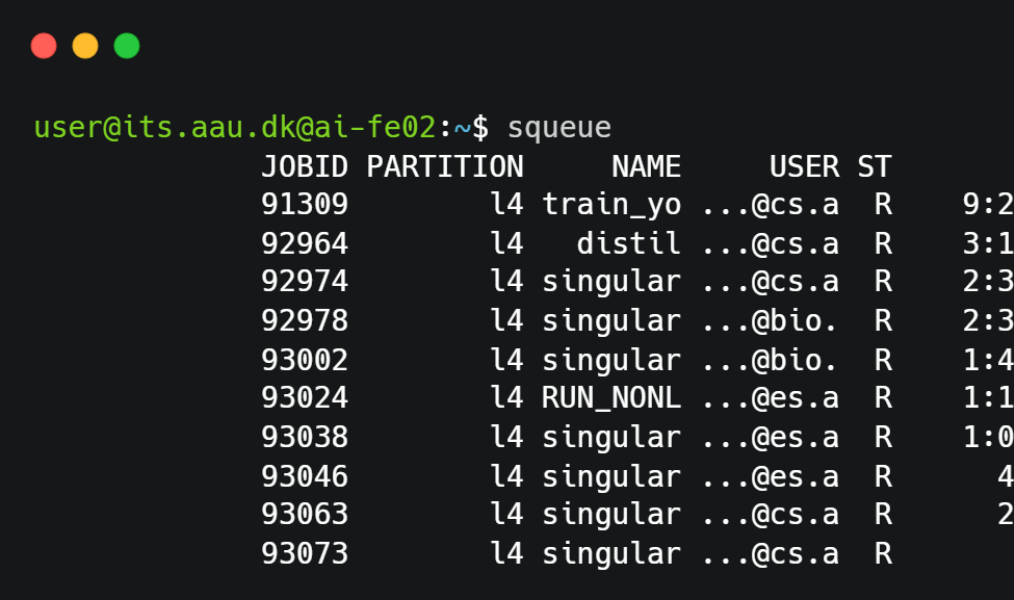

Efficient Batch Processing

AI Cloud uses Slurm for seamless job scheduling, enabling easy batch processing and background task management.
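As a rough illustration of what batch processing with Slurm can look like, the Python sketch below assembles the text of a batch script. The helper name `build_batch_script`, the job name, and the `python train.py` command are hypothetical and chosen only for this example. The `#SBATCH` options shown (`--job-name`, `--gres`, `--time`, `--output`) are standard Slurm options, but the resource request syntax and limits that apply on AI Cloud are assumptions here, so check the AI Cloud guides before relying on them.

```python
def build_batch_script(job_name, command, gpus=1, time_limit="01:00:00"):
    """Return the text of a Slurm batch script (hypothetical helper).

    The values below are illustrative assumptions, not AI Cloud defaults.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",   # name shown in the job queue
        f"#SBATCH --gres=gpu:{gpus}",       # number of GPUs requested
        f"#SBATCH --time={time_limit}",     # wall-clock limit (HH:MM:SS)
        "#SBATCH --output=%x-%j.out",       # log file: <job name>-<job id>.out
        "",
        command,                            # the workload to run on the compute node
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print an example script for a hypothetical training job.
    print(build_batch_script("train-demo", "python train.py"))
```

The printed text could be saved to a file and submitted from the front-end node with `sbatch <file>`; Slurm then runs the job in the background once the requested resources become available.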

Common Use Cases

Training deep learning models

GPU access for AI projects

Fine-tuning large language models

Training speech models for PhD research

AI research with CT images

Drug discovery acceleration

ML models for question answering

Knowledge graph embedding models

Machine vision system development

Important Information

Not for confidential or sensitive data

On AI Cloud, you are only allowed to work with data classified as level 1 under AAU’s data classification model.

If you need to work with level 2 or 3 data, use UCloud instead, another HPC platform that we support.

Not suitable for CPU-only computational tasks

The powerful GPUs allow users to process large datasets much more efficiently than pure CPU processing would, provided that the application can be parallelised in a GPU-compatible manner. AI Cloud is not designed for CPU-only computational tasks; for those needs, we recommend alternative platforms such as UCloud or Strato.

Review the terms and conditions

Before getting started, take a few moments to review the terms and conditions of using AI Cloud, and don't hesitate to reach out to our support team if you have any questions or concerns.